vdanilov:main from vdanilov:mmdetection_pipeline


In the interest of fostering an open and welcoming environment, we as contributors and maintainers pledge to making participation in our project and our community a harassment-free experience for everyone, regardless of age, body size, disability, ethnicity, sex characteristics, gender identity and expression, level of experience, education, socio-economic status, nationality, personal appearance, race, religion, or sexual identity and orientation.

Examples of behavior that contributes to creating a positive environment include:

Examples of unacceptable behavior by participants include:

Project maintainers are responsible for clarifying the standards of acceptable behavior and are expected to take appropriate and fair corrective action in response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or reject comments, commits, code, wiki edits, issues, and other contributions that are not aligned to this Code of Conduct, or to ban temporarily or permanently any contributor for other behaviors that they deem inappropriate, threatening, offensive, or harmful.

This Code of Conduct applies both within project spaces and in public spaces when an individual is representing the project or its community. Examples of representing a project or community include using an official project e-mail address, posting via an official social media account, or acting as an appointed representative at an online or offline event. Representation of a project may be further defined and clarified by project maintainers.

Instances of abusive, harassing, or otherwise unacceptable behavior may be reported by contacting the project team at chenkaidev@gmail.com. All complaints will be reviewed and investigated and will result in a response that is deemed necessary and appropriate to the circumstances. The project team is obligated to maintain confidentiality with regard to the reporter of an incident. Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good faith may face temporary or permanent repercussions as determined by other members of the project's leadership.

This Code of Conduct is adapted from the Contributor Covenant, version 1.4, available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

For answers to common questions about this code of conduct, see https://www.contributor-covenant.org/faq

We appreciate all contributions to improve MMDetection. Please refer to CONTRIBUTING.md in MMCV for more details about the contributing guideline.


Thanks for your contribution; we appreciate it a lot. Following the instructions below will make your pull request healthier and help it get feedback more easily. If you do not understand some items, don't worry: just open the pull request and ask the maintainers for help.

Please describe the motivation of this PR and the goal you want to achieve through this PR.

Please briefly describe what modification is made in this PR.

Does the modification introduce changes that break the backward-compatibility of the downstream repos? If so, please describe how it breaks the compatibility and how the downstream projects should modify their code to keep compatibility with this PR.

If this PR introduces a new feature, it is better to list some use cases here, and update the documentation.


📘Documentation | 🛠️Installation | 👀Model Zoo | 🆕Update News | 🚀Ongoing Projects | 🤔Reporting Issues

English | 简体中文

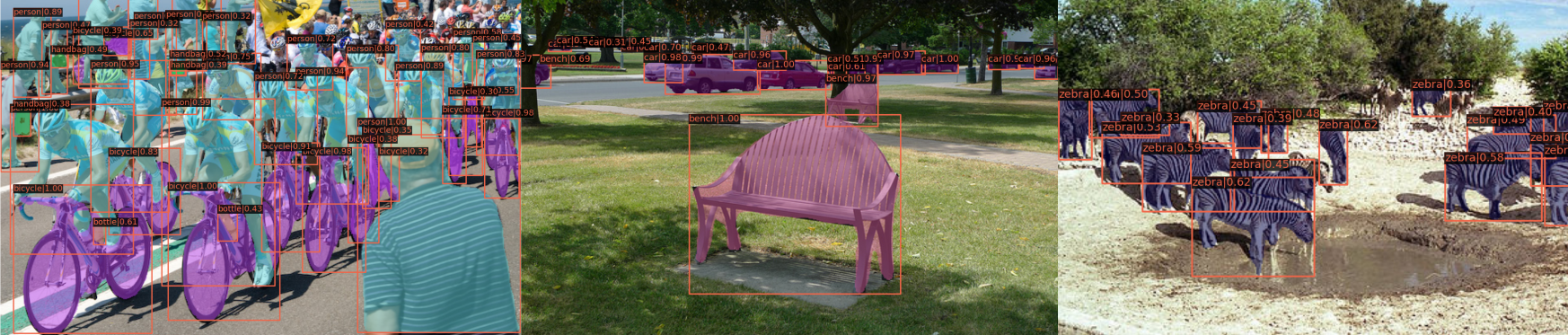

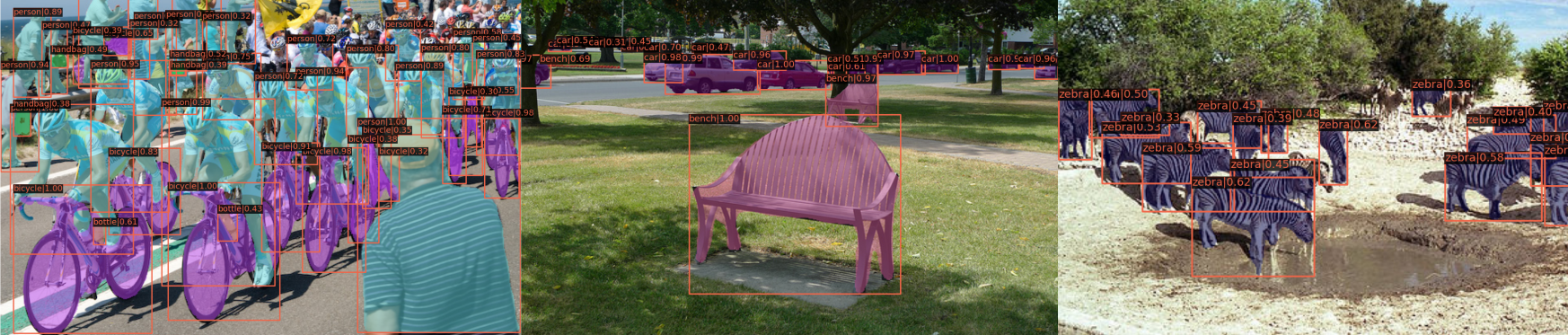

MMDetection is an open source object detection toolbox based on PyTorch. It is a part of the OpenMMLab project.

The master branch works with PyTorch 1.5+.

Modular Design

We decompose the detection framework into different components and one can easily construct a customized object detection framework by combining different modules.
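The idea of assembling a detector from interchangeable parts can be illustrated with a small registry. This is only a minimal sketch of the general pattern: `COMPONENTS`, `register`, `build`, `build_detector`, `ToyBackbone` and `ToyHead` are hypothetical names invented for this example and are not MMDetection's actual API.

```python
from typing import Callable, Dict

# Hypothetical registry mapping a type name to a factory (illustration only).
COMPONENTS: Dict[str, Callable[..., object]] = {}


def register(name: str):
    """Decorator that stores a class in the registry under `name`."""
    def decorator(factory):
        COMPONENTS[name] = factory
        return factory
    return decorator


@register("ToyBackbone")
class ToyBackbone:
    def __init__(self, depth: int):
        self.depth = depth


@register("ToyHead")
class ToyHead:
    def __init__(self, num_classes: int):
        self.num_classes = num_classes


def build(cfg: dict):
    """Instantiate one component from a config dict with a 'type' key."""
    cfg = dict(cfg)          # copy so the caller's dict is not modified
    kind = cfg.pop("type")
    return COMPONENTS[kind](**cfg)


def build_detector(cfg: dict) -> dict:
    """Build every named part of a detector from its own sub-config."""
    return {name: build(part) for name, part in cfg.items()}


detector = build_detector({
    "backbone": {"type": "ToyBackbone", "depth": 50},
    "head": {"type": "ToyHead", "num_classes": 80},
})
```

Swapping a component then only requires changing the `type` string (and its arguments) in the config dict, not the building code.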

Support for multiple frameworks out of the box

The toolbox directly supports popular and contemporary detection frameworks, e.g. Faster RCNN, Mask RCNN, RetinaNet, etc.

High efficiency

All basic bbox and mask operations run on GPUs. The training speed is faster than or comparable to other codebases, including Detectron2, maskrcnn-benchmark and SimpleDet.

State of the art

The toolbox stems from the codebase developed by the MMDet team, who won the COCO Detection Challenge in 2018, and we keep pushing it forward.

Apart from MMDetection, we also released mmcv, a library for computer vision research on which this toolbox depends heavily.

2.28.1 was released on 1/2/2023:

Please refer to changelog.md for details and release history.

For compatibility changes between different versions of MMDetection, please refer to compatibility.md.

We are excited to announce our latest work on real-time object recognition tasks, RTMDet, a family of fully convolutional single-stage detectors. RTMDet not only achieves the best parameter-accuracy trade-off on object detection from tiny to extra-large model sizes but also obtains new state-of-the-art performance on instance segmentation and rotated object detection tasks. Details can be found in the technical report. Pre-trained models are here.

| Task | Dataset | AP | FPS(TRT FP16 BS1 3090) |
|---|---|---|---|
| Object Detection | COCO | 52.8 | 322 |
| Instance Segmentation | COCO | 44.6 | 188 |
| Rotated Object Detection | DOTA | 78.9(single-scale)/81.3(multi-scale) | 121 |

A brand new version of MMDetection, v3.0.0rc5, was released on 26/12/2022:

Find more new features in 3.x branch. Issues and PRs are welcome!

Please refer to Installation for installation instructions.

Please see get_started.md for the basic usage of MMDetection. We provide a colab tutorial and an instance segmentation colab tutorial, as well as other tutorials for:

Results and models are available in the model zoo.

| Backbones | Necks | Loss | Common |


Some other methods are also supported in projects using MMDetection.

Please refer to FAQ for frequently asked questions.

We appreciate all contributions to improve MMDetection. Ongoing projects can be found in our GitHub Projects. Community users are welcome to participate in these projects. Please refer to CONTRIBUTING.md for the contributing guideline.

MMDetection is an open source project that is contributed by researchers and engineers from various colleges and companies. We appreciate all the contributors who implement their methods or add new features, as well as users who give valuable feedback. We hope that the toolbox and benchmark can serve the growing research community by providing a flexible toolkit to reimplement existing methods and develop new detectors.

If you use this toolbox or benchmark in your research, please cite this project.

@article{mmdetection,

title = {{MMDetection}: Open MMLab Detection Toolbox and Benchmark},

author = {Chen, Kai and Wang, Jiaqi and Pang, Jiangmiao and Cao, Yuhang and

Xiong, Yu and Li, Xiaoxiao and Sun, Shuyang and Feng, Wansen and

Liu, Ziwei and Xu, Jiarui and Zhang, Zheng and Cheng, Dazhi and

Zhu, Chenchen and Cheng, Tianheng and Zhao, Qijie and Li, Buyu and

Lu, Xin and Zhu, Rui and Wu, Yue and Dai, Jifeng and Wang, Jingdong

and Shi, Jianping and Ouyang, Wanli and Loy, Chen Change and Lin, Dahua},

journal= {arXiv preprint arXiv:1906.07155},

year={2019}

}

This project is released under the Apache 2.0 license.

English | 简体中文

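MMDetection is an open source object detection toolbox based on PyTorch. It is a part of the OpenMMLab project.

The master branch currently supports PyTorch 1.5 and later.

Modular design

MMDetection decouples the detection framework into separate modules; by combining different modules, users can easily build customized detection models.

A rich set of plug-and-play algorithms and models

MMDetection supports many mainstream and state-of-the-art detection algorithms, such as Faster R-CNN, Mask R-CNN, and RetinaNet.

High speed

All basic box and mask operations have GPU implementations. Training speed is faster than or comparable to other codebases, including Detectron2, maskrcnn-benchmark, and SimpleDet.

High performance

The MMDetection library stems from the code developed by the MMDet team, the winning team of the COCO 2018 detection challenge, and we have kept improving it since.

Apart from MMDetection, we have also open-sourced MMCV, a foundational computer vision library that is MMDetection's main dependency.

The latest version, 2.28.1, was released on 2023.2.1:

For more details on version updates and release history, please read the changelog.

For compatibility between different versions of MMDetection, please refer to the compatibility documentation.

We are excited to introduce our latest work on real-time object recognition tasks, RTMDet, a family of fully convolutional single-stage detectors. RTMDet not only achieves the best trade-off between parameter count and accuracy on object detection from tiny to extra-large model sizes, but also obtains state-of-the-art results on real-time instance segmentation and rotated object detection tasks. More details can be found in the technical report. Pre-trained models can be found here.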

| Task | Dataset | AP | FPS(TRT FP16 BS1 3090) |
|---|---|---|---|
| Object Detection | COCO | 52.8 | 322 |
| Instance Segmentation | COCO | 44.6 | 188 |
| Rotated Object Detection | DOTA | 78.9(single-scale)/81.3(multi-scale) | 121 |

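The brand new v3.0.0rc5 was released on 2022.12.26:

Please refer to the installation instructions to install.

Please refer to the getting started documentation to learn the basic usage of MMDetection. We provide a detection colab tutorial and an instance segmentation colab tutorial, as well as a complete run-through tutorial for beginners. Other tutorials are listed below:

We also provide a collection of Chinese-language articles explaining MMDetection.

Test results and models can be found in the model zoo.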

| Backbones | Necks | Loss | Common |


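Some other supported algorithms are listed in projects based on MMDetection.

Please refer to the FAQ for questions commonly asked by other users.

We thank all contributors for their efforts to improve MMDetection. Ongoing projects are listed on the GitHub Projects page, and community users are very welcome to take part in them. Please refer to the contribution guide for guidance on contributing.

MMDetection is an open source project contributed to by researchers and engineers from various universities and companies. We thank all the contributors who reimplement algorithms or add new features, as well as the users who give valuable feedback. We hope that this toolbox and benchmark can provide the community with flexible tools for reproducing existing algorithms and developing new models, and so keep contributing to the open source community.

If you use the code or benchmark of this project in your research, please cite MMDetection with the following bibtex.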

@article{mmdetection,

title = {{MMDetection}: Open MMLab Detection Toolbox and Benchmark},

author = {Chen, Kai and Wang, Jiaqi and Pang, Jiangmiao and Cao, Yuhang and

Xiong, Yu and Li, Xiaoxiao and Sun, Shuyang and Feng, Wansen and

Liu, Ziwei and Xu, Jiarui and Zhang, Zheng and Cheng, Dazhi and

Zhu, Chenchen and Cheng, Tianheng and Zhao, Qijie and Li, Buyu and

Lu, Xin and Zhu, Rui and Wu, Yue and Dai, Jifeng and Wang, Jingdong

and Shi, Jianping and Ouyang, Wanli and Loy, Chen Change and Lin, Dahua},

journal= {arXiv preprint arXiv:1906.07155},

year={2019}

}

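This project is released under the Apache 2.0 open source license.

Scan the QR codes below to follow the OpenMMLab team's official Zhihu account and join the OpenMMLab team's official QQ group.

What we offer in the OpenMMLab community:

Packed with useful content 📘 and waiting for you to say hi 💗, the OpenMMLab community looks forward to your joining 👬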


Data augmentation is a commonly used technique for increasing both the size and the diversity of labeled training sets by leveraging input transformations that preserve output labels. In computer vision domain, image augmentations have become a common implicit regularization technique to combat overfitting in deep convolutional neural networks and are ubiquitously used to improve performance. While most deep learning frameworks implement basic image transformations, the list is typically limited to some variations and combinations of flipping, rotating, scaling, and cropping. Moreover, the image processing speed varies in existing tools for image augmentation. We present Albumentations, a fast and flexible library for image augmentations with many various image transform operations available, that is also an easy-to-use wrapper around other augmentation libraries. We provide examples of image augmentations for different computer vision tasks and show that Albumentations is faster than other commonly used image augmentation tools on the most of commonly used image transformations.
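As a rough illustration of a label-preserving transformation, the sketch below flips an image horizontally and moves its bounding box accordingly. It is plain Python and does not use the Albumentations API; `hflip_image` and `hflip_bbox` are hypothetical helper names, and the box format `(x_min, y_min, x_max, y_max)` in pixel edges is an assumption made for this example.

```python
def hflip_image(image):
    """Flip a 2D image (list of rows) left to right."""
    return [row[::-1] for row in image]


def hflip_bbox(bbox, image_width):
    """Flip an (x_min, y_min, x_max, y_max) box so it still covers the object."""
    x_min, y_min, x_max, y_max = bbox
    return (image_width - x_max, y_min, image_width - x_min, y_max)


# A 2x4 toy image whose "object" (value 1) occupies the right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
bbox = (2, 0, 4, 2)  # assumed format: pixel edges, not pixel centers

flipped_image = hflip_image(image)
flipped_bbox = hflip_bbox(bbox, image_width=4)
```

After the flip the object sits in the left half of the image, and the transformed box still encloses it, so the class label attached to the box remains valid.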

| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |
|---|---|---|---|---|---|---|---|---|
| R-50 | pytorch | 1x | 4.4 | 16.6 | 38.0 | 34.5 | config | model \| log |

@article{2018arXiv180906839B,

author = {A. Buslaev and A. Parinov and E. Khvedchenya and V.~I. Iglovikov and A.~A. Kalinin},

title = "{Albumentations: fast and flexible image augmentations}",

journal = {ArXiv e-prints},

eprint = {1809.06839},

year = 2018

}


Object detection has been dominated by anchor-based detectors for several years. Recently, anchor-free detectors have become popular due to the proposal of FPN and Focal Loss. In this paper, we first point out that the essential difference between anchor-based and anchor-free detection is actually how to define positive and negative training samples, which leads to the performance gap between them. If they adopt the same definition of positive and negative samples during training, there is no obvious difference in the final performance, no matter regressing from a box or a point. This shows that how to select positive and negative training samples is important for current object detectors. Then, we propose an Adaptive Training Sample Selection (ATSS) to automatically select positive and negative samples according to statistical characteristics of object. It significantly improves the performance of anchor-based and anchor-free detectors and bridges the gap between them. Finally, we discuss the necessity of tiling multiple anchors per location on the image to detect objects. Extensive experiments conducted on MS COCO support our aforementioned analysis and conclusions. With the newly introduced ATSS, we improve state-of-the-art detectors by a large margin to 50.7% AP without introducing any overhead.
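The statistical selection step can be sketched as follows. In the ATSS paper, the IoU threshold for each ground-truth box is the mean plus the standard deviation of the IoUs of its candidate anchors. The sketch covers only this thresholding step: picking candidates by center distance and checking that an anchor center lies inside the box are omitted. The function name `atss_positive_indices`, the example IoU values, and the use of the sample standard deviation are assumptions made for illustration.

```python
from statistics import mean, stdev


def atss_positive_indices(candidate_ious):
    """Return (threshold, indices of candidates kept as positives).

    The threshold adapts to each object: mean + standard deviation of the
    IoUs between the object's candidate anchors and its ground-truth box.
    """
    threshold = mean(candidate_ious) + stdev(candidate_ious)
    positives = [i for i, iou in enumerate(candidate_ious) if iou >= threshold]
    return threshold, positives


# Hypothetical IoUs of five candidate anchors for one ground-truth box.
ious = [0.1, 0.2, 0.3, 0.6, 0.8]
threshold, positives = atss_positive_indices(ious)
```

Because the threshold is derived from each object's own IoU distribution, no fixed IoU cut-off has to be tuned by hand.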

| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |
|---|---|---|---|---|---|---|---|
| R-50 | pytorch | 1x | 3.7 | 19.7 | 39.4 | config | model \| log |
| R-101 | pytorch | 1x | 5.6 | 12.3 | 41.5 | config | model \| log |

@article{zhang2019bridging,

title = {Bridging the Gap Between Anchor-based and Anchor-free Detection via Adaptive Training Sample Selection},

author = {Zhang, Shifeng and Chi, Cheng and Yao, Yongqiang and Lei, Zhen and Li, Stan Z.},

journal = {arXiv preprint arXiv:1912.02424},

year = {2019}

}


AutoAssign: Differentiable Label Assignment for Dense Object Detection

Determining positive/negative samples for object detection is known as label assignment. Here we present an anchor-free detector named AutoAssign. It requires little human knowledge and achieves appearance-aware through a fully differentiable weighting mechanism. During training, to both satisfy the prior distribution of data and adapt to category characteristics, we present Center Weighting to adjust the category-specific prior distributions. To adapt to object appearances, Confidence Weighting is proposed to adjust the specific assign strategy of each instance. The two weighting modules are then combined to generate positive and negative weights to adjust each location's confidence. Extensive experiments on the MS COCO show that our method steadily surpasses other best sampling strategies by large margins with various backbones. Moreover, our best model achieves 52.1% AP, outperforming all existing one-stage detectors. Besides, experiments on other datasets, e.g., PASCAL VOC, Objects365, and WiderFace, demonstrate the broad applicability of AutoAssign.

| Backbone | Style | Lr schd | Mem (GB) | box AP | Config | Download |
|---|---|---|---|---|---|---|
| R-50 | caffe | 1x | 4.08 | 40.4 | config | model \| log |

Note:

@article{zhu2020autoassign,

title={AutoAssign: Differentiable Label Assignment for Dense Object Detection},

author={Zhu, Benjin and Wang, Jianfeng and Jiang, Zhengkai and Zong, Fuhang and Liu, Songtao and Li, Zeming and Sun, Jian},

journal={arXiv preprint arXiv:2007.03496},

year={2020}

}


Feature upsampling is a key operation in a number of modern convolutional network architectures, e.g. feature pyramids. Its design is critical for dense prediction tasks such as object detection and semantic/instance segmentation. In this work, we propose Content-Aware ReAssembly of FEatures (CARAFE), a universal, lightweight and highly effective operator to fulfill this goal. CARAFE has several appealing properties: (1) Large field of view. Unlike previous works (e.g. bilinear interpolation) that only exploit sub-pixel neighborhood, CARAFE can aggregate contextual information within a large receptive field. (2) Content-aware handling. Instead of using a fixed kernel for all samples (e.g. deconvolution), CARAFE enables instance-specific content-aware handling, which generates adaptive kernels on-the-fly. (3) Lightweight and fast to compute. CARAFE introduces little computational overhead and can be readily integrated into modern network architectures. We conduct comprehensive evaluations on standard benchmarks in object detection, instance/semantic segmentation and inpainting. CARAFE shows consistent and substantial gains across all the tasks (1.2%, 1.3%, 1.8%, 1.1db respectively) with negligible computational overhead. It has great potential to serve as a strong building block for future research.

The results on COCO 2017 val are shown in the table below.

| Method | Backbone | Style | Lr schd | Test Proposal Num | Inf time (fps) | Box AP | Mask AP | Config | Download |
|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN w/ CARAFE | R-50-FPN | pytorch | 1x | 1000 | 16.5 | 38.6 | 38.6 | config | model \| log |
| - | - | - | - | 2000 | | | | | |
| Mask R-CNN w/ CARAFE | R-50-FPN | pytorch | 1x | 1000 | 14.0 | 39.3 | 35.8 | config | model \| log |
| - | - | - | - | 2000 | | | | | |

The CUDA implementation of CARAFE can be found at https://github.com/myownskyW7/CARAFE.

We provide config files to reproduce the object detection & instance segmentation results in the ICCV 2019 Oral paper for CARAFE: Content-Aware ReAssembly of FEatures.

@inproceedings{Wang_2019_ICCV,

title = {CARAFE: Content-Aware ReAssembly of FEatures},

author = {Wang, Jiaqi and Chen, Kai and Xu, Rui and Liu, Ziwei and Loy, Chen Change and Lin, Dahua},

booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

month = {October},

year = {2019}

}


Cascade R-CNN: High Quality Object Detection and Instance Segmentation

In object detection, the intersection over union (IoU) threshold is frequently used to define positives/negatives. The threshold used to train a detector defines its quality. While the commonly used threshold of 0.5 leads to noisy (low-quality) detections, detection performance frequently degrades for larger thresholds. This paradox of high-quality detection has two causes: 1) overfitting, due to vanishing positive samples for large thresholds, and 2) inference-time quality mismatch between detector and test hypotheses. A multi-stage object detection architecture, the Cascade R-CNN, composed of a sequence of detectors trained with increasing IoU thresholds, is proposed to address these problems. The detectors are trained sequentially, using the output of a detector as training set for the next. This resampling progressively improves hypotheses quality, guaranteeing a positive training set of equivalent size for all detectors and minimizing overfitting. The same cascade is applied at inference, to eliminate quality mismatches between hypotheses and detectors. An implementation of the Cascade R-CNN without bells or whistles achieves state-of-the-art performance on the COCO dataset, and significantly improves high-quality detection on generic and specific object detection datasets, including VOC, KITTI, CityPerson, and WiderFace. Finally, the Cascade R-CNN is generalized to instance segmentation, with nontrivial improvements over the Mask R-CNN.
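The effect of raising the IoU threshold stage by stage can be sketched with a toy example. The stage thresholds 0.5, 0.6 and 0.7 are the values used in the Cascade R-CNN paper; everything else is an assumption for illustration. In particular, `toy_refine` is a hypothetical stand-in for a trained box regressor that simply moves each box halfway toward the ground truth, and the proposal coordinates are made up.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def toy_refine(box, gt, step=0.5):
    """Hypothetical regressor: move every coordinate partway toward the GT."""
    return tuple(c + step * (g - c) for c, g in zip(box, gt))


def count_positives_per_stage(proposals, gt, thresholds, refine):
    """Count proposals labelled positive at each stage's IoU threshold."""
    boxes = list(proposals)
    counts = []
    for threshold in thresholds:
        counts.append(sum(iou(box, gt) >= threshold for box in boxes))
        if refine:
            # The next stage is trained on the refined output of this one.
            boxes = [toy_refine(box, gt) for box in boxes]
    return counts


gt_box = (0, 0, 100, 100)
proposals = [(0, 0, 100, 62), (20, 0, 120, 100), (0, 0, 100, 52)]
stage_thresholds = (0.5, 0.6, 0.7)

cascade_counts = count_positives_per_stage(proposals, gt_box, stage_thresholds, refine=True)
fixed_counts = count_positives_per_stage(proposals, gt_box, stage_thresholds, refine=False)
```

With refinement between stages the set of positives stays the same size as the threshold grows, whereas applying the higher thresholds to the original proposals makes the positives vanish, which is the overfitting cause described in the abstract.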

| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | Config | Download |
|---|---|---|---|---|---|---|---|
| R-50-FPN | caffe | 1x | 4.2 | | 40.4 | config | model \| log |
| R-50-FPN | pytorch | 1x | 4.4 | 16.1 | 40.3 | config | model \| log |
| R-50-FPN | pytorch | 20e | - | - | 41.0 | config | model \| log |
| R-101-FPN | caffe | 1x | 6.2 | | 42.3 | config | model \| log |
| R-101-FPN | pytorch | 1x | 6.4 | 13.5 | 42.0 | config | model \| log |
| R-101-FPN | pytorch | 20e | - | - | 42.5 | config | model \| log |
| X-101-32x4d-FPN | pytorch | 1x | 7.6 | 10.9 | 43.7 | config | model \| log |
| X-101-32x4d-FPN | pytorch | 20e | 7.6 | | 43.7 | config | model \| log |
| X-101-64x4d-FPN | pytorch | 1x | 10.7 | | 44.7 | config | model \| log |
| X-101-64x4d-FPN | pytorch | 20e | 10.7 | | 44.5 | config | model \| log |

| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |
|---|---|---|---|---|---|---|---|---|
| R-50-FPN | caffe | 1x | 5.9 | | 41.2 | 36.0 | config | model \| log |
| R-50-FPN | pytorch | 1x | 6.0 | 11.2 | 41.2 | 35.9 | config | model \| log |
| R-50-FPN | pytorch | 20e | - | - | 41.9 | 36.5 | config | model \| log |
| R-101-FPN | caffe | 1x | 7.8 | | 43.2 | 37.6 | config | model \| log |
| R-101-FPN | pytorch | 1x | 7.9 | 9.8 | 42.9 | 37.3 | config | model \| log |
| R-101-FPN | pytorch | 20e | - | - | 43.4 | 37.8 | config | model \| log |
| X-101-32x4d-FPN | pytorch | 1x | 9.2 | 8.6 | 44.3 | 38.3 | config | model \| log |
| X-101-32x4d-FPN | pytorch | 20e | 9.2 | - | 45.0 | 39.0 | config | model \| log |
| X-101-64x4d-FPN | pytorch | 1x | 12.2 | 6.7 | 45.3 | 39.2 | config | model \| log |
| X-101-64x4d-FPN | pytorch | 20e | 12.2 | | 45.6 | 39.5 | config | model \| log |

Notes:

The 20e schedule in Cascade (Mask) R-CNN decreases the lr at epochs 16 and 19, for a total of 20 epochs.

We also train some models with longer schedules and multi-scale training for Cascade Mask R-CNN. Users can fine-tune them for downstream tasks.

| Backbone | Style | Lr schd | Mem (GB) | Inf time (fps) | box AP | mask AP | Config | Download |
|---|---|---|---|---|---|---|---|---|
| R-50-FPN | caffe | 3x | 5.7 | | 44.0 | 38.1 | config | model \| log |
| R-50-FPN | pytorch | 3x | 5.9 | | 44.3 | 38.5 | config | model \| log |
| R-101-FPN | caffe | 3x | 7.7 | | 45.4 | 39.5 | config | model \| log |
| R-101-FPN | pytorch | 3x | 7.8 | | 45.5 | 39.6 | config | model \| log |
| X-101-32x4d-FPN | pytorch | 3x | 9.0 | | 46.3 | 40.1 | config | model \| log |
| X-101-32x8d-FPN | pytorch | 3x | 12.1 | | 46.1 | 39.9 | config | model \| log |
| X-101-64x4d-FPN | pytorch | 3x | 12.0 | | 46.6 | 40.3 | config | model \| log |
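The 20e schedule mentioned in the notes above (lr decreased at epochs 16 and 19, 20 epochs in total) can be written as a simple step function. This is a minimal sketch: the decay factor `gamma=0.1`, the base lr of 0.02, zero-based epoch counting, and the function name `step_lr` are assumptions for illustration, not values stated in this README.

```python
def step_lr(epoch, base_lr, milestones=(16, 19), gamma=0.1):
    """Learning rate for a zero-based epoch under a step schedule.

    The lr is multiplied by `gamma` once for every milestone that has
    already been reached.
    """
    passed = sum(epoch >= milestone for milestone in milestones)
    return base_lr * gamma ** passed


TOTAL_EPOCHS = 20
BASE_LR = 0.02  # assumed value for illustration

schedule = [step_lr(epoch, BASE_LR) for epoch in range(TOTAL_EPOCHS)]
```

Under these assumptions the first 16 epochs run at the base lr, the next three at one tenth of it, and the final epoch at one hundredth.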

@article{Cai_2019,

title={Cascade R-CNN: High Quality Object Detection and Instance Segmentation},

ISSN={1939-3539},

url={http://dx.doi.org/10.1109/tpami.2019.2956516},

DOI={10.1109/tpami.2019.2956516},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

publisher={Institute of Electrical and Electronics Engineers (IEEE)},

author={Cai, Zhaowei and Vasconcelos, Nuno},

year={2019},

pages={1–1}

}
