Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easily understandable. Pull requests very welcome!

Docs • Popular imodels • Custom imodels • Demo notebooks

Implementations of different interpretable models, all compatible with scikit-learn. The models are easy to install and use:

```python
from imodels import BayesianRuleListClassifier, GreedyRuleListClassifier, SkopeRulesClassifier
from imodels import SLIMRegressor, RuleFitRegressor

model = BayesianRuleListClassifier()  # initialize a model
model.fit(X_train, y_train)  # fit model
preds = model.predict(X_test)  # discrete predictions: shape is (n_test, 1)
preds_proba = model.predict_proba(X_test)  # predicted probabilities: shape is (n_test, n_classes)
```

Install with `pip install imodels` (see here for help). Contains the following models:

| Model | Reference | Description |
|---|---|---|
| Rulefit rule set | 🗂️, 🔗, 📄 | Extracts rules from a decision tree then builds a sparse linear model with them |
| Skope rule set | 🗂️, 🔗 | Extracts rules from gradient-boosted trees, deduplicates them, then forms a linear combination of them based on their OOB precision |
| Boosted rule set | 🗂️, 🔗, 📄 | Uses Adaboost to sequentially learn a set of rules |
| Bayesian rule list | 🗂️, 🔗, 📄 | Learns a compact rule list by sampling rule lists (rather than using a greedy heuristic) |
| Greedy rule list | 🗂️, 🔗 | Uses CART to learn a list (only a single path), rather than a decision tree |
| OneR rule list | 🗂️, 📄 | Learns rule list restricted to only one feature |
| Optimal rule tree | 🗂️, 🔗, 📄 | (In progress) Learns succinct trees using global optimization rather than greedy heuristics |
| Iterative random forest | 🗂️, 🔗, 📄 | (In progress) Repeatedly fit random forest, giving features with high importance a higher chance of being selected. |
| Sparse integer linear model | 🗂️, 📄 | Forces coefficients to be integers |
| Rule sets | ⌛ | (Coming soon) Many popular rule sets including SLIPPER, Lightweight Rule Induction, MLRules |

Docs 🗂️, Reference code implementation 🔗, Research paper 📄
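
To make one of the simpler table entries concrete, here is a minimal sketch of the textbook OneR idea (a rule restricted to a single feature) in plain Python. It is not the imodels implementation: the function names `fit_one_r` and `predict_one_r` and the toy data are made up for illustration, and features are assumed to be categorical.

```python
from collections import Counter, defaultdict


def fit_one_r(rows, labels):
    """Pick the single feature whose value -> majority-label mapping makes the fewest training errors."""
    default_label = Counter(labels).most_common(1)[0][0]
    best = None
    for j in range(len(rows[0])):
        # Count how often each label occurs for each value of feature j.
        counts = defaultdict(Counter)
        for row, label in zip(rows, labels):
            counts[row[j]][label] += 1
        # Each feature value predicts its majority label.
        mapping = {value: c.most_common(1)[0][0] for value, c in counts.items()}
        errors = sum(mapping[row[j]] != label for row, label in zip(rows, labels))
        if best is None or errors < best[0]:
            best = (errors, j, mapping)
    _, feature, mapping = best
    return feature, mapping, default_label


def predict_one_r(model, rows):
    """Look up each row's value of the chosen feature; unseen values get the default label."""
    feature, mapping, default_label = model
    return [mapping.get(row[feature], default_label) for row in rows]


# Toy data (hypothetical): columns are (outlook, windy).
X = [("sunny", "no"), ("sunny", "yes"), ("rainy", "no"), ("rainy", "yes"), ("overcast", "no")]
y = ["play", "play", "stay", "stay", "play"]

one_r_model = fit_one_r(X, y)
one_r_preds = predict_one_r(one_r_model, X)
```

The fitted model is just a feature index and a small lookup table, which is what makes this kind of rule easy to read off and audit.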

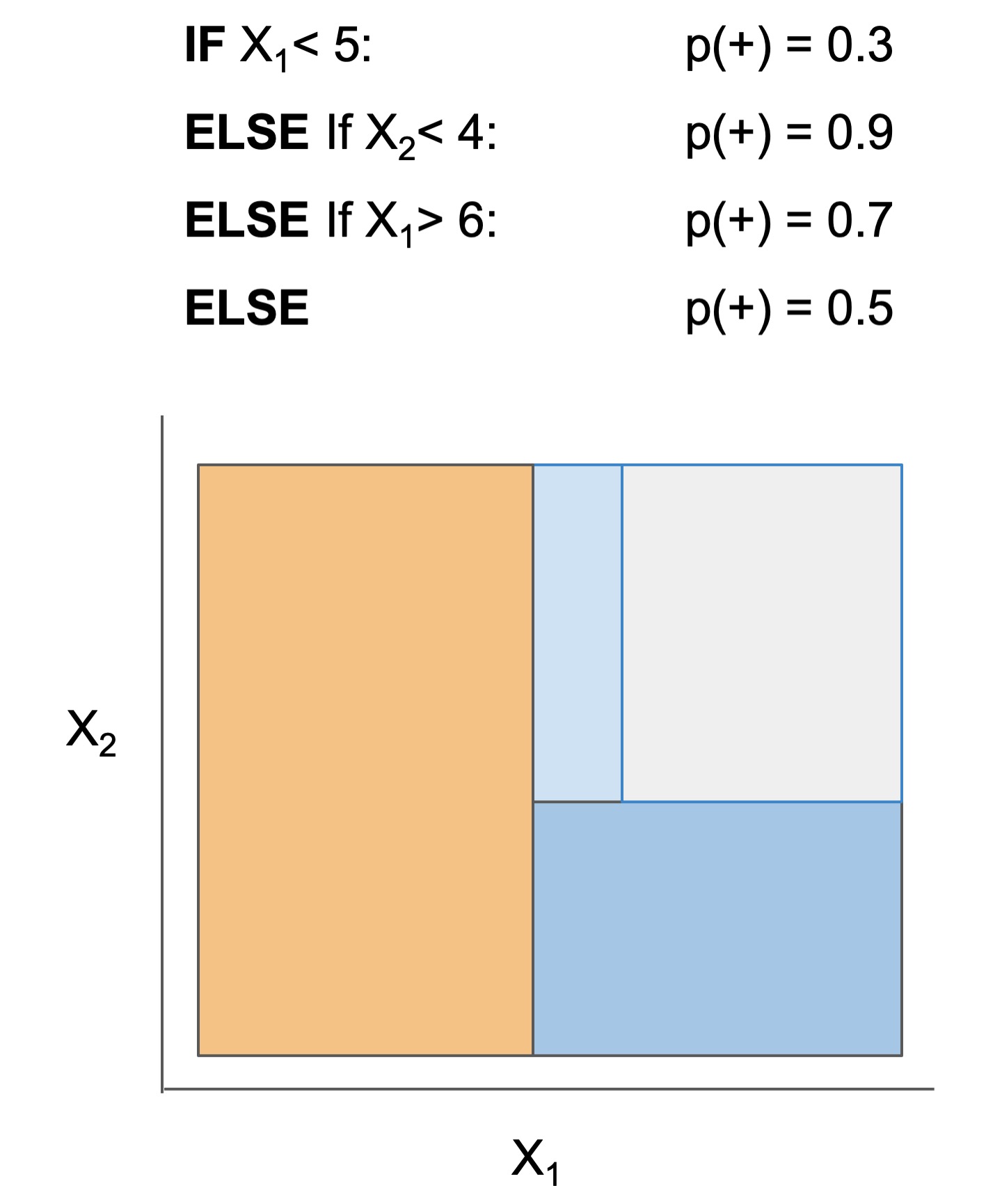

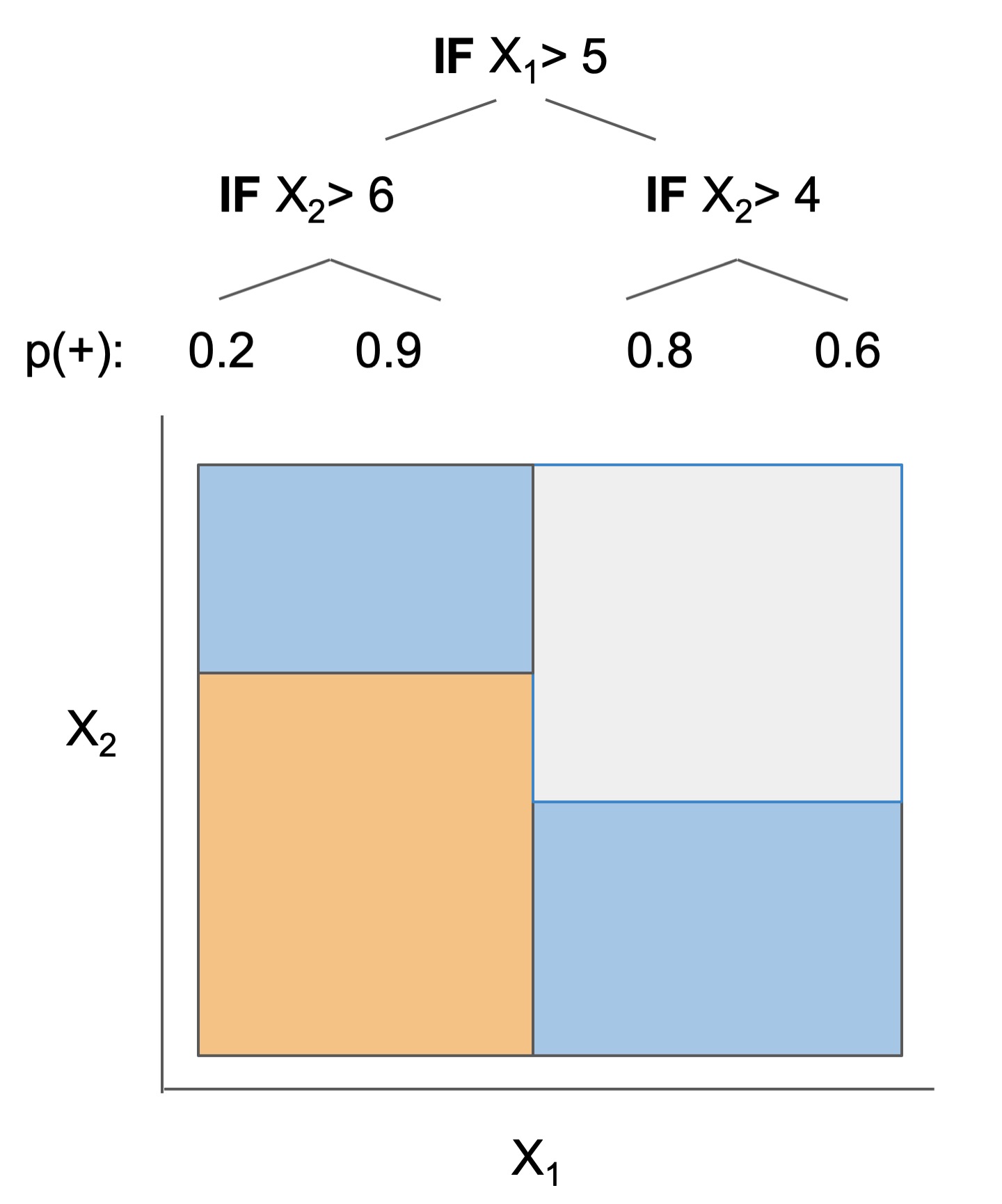

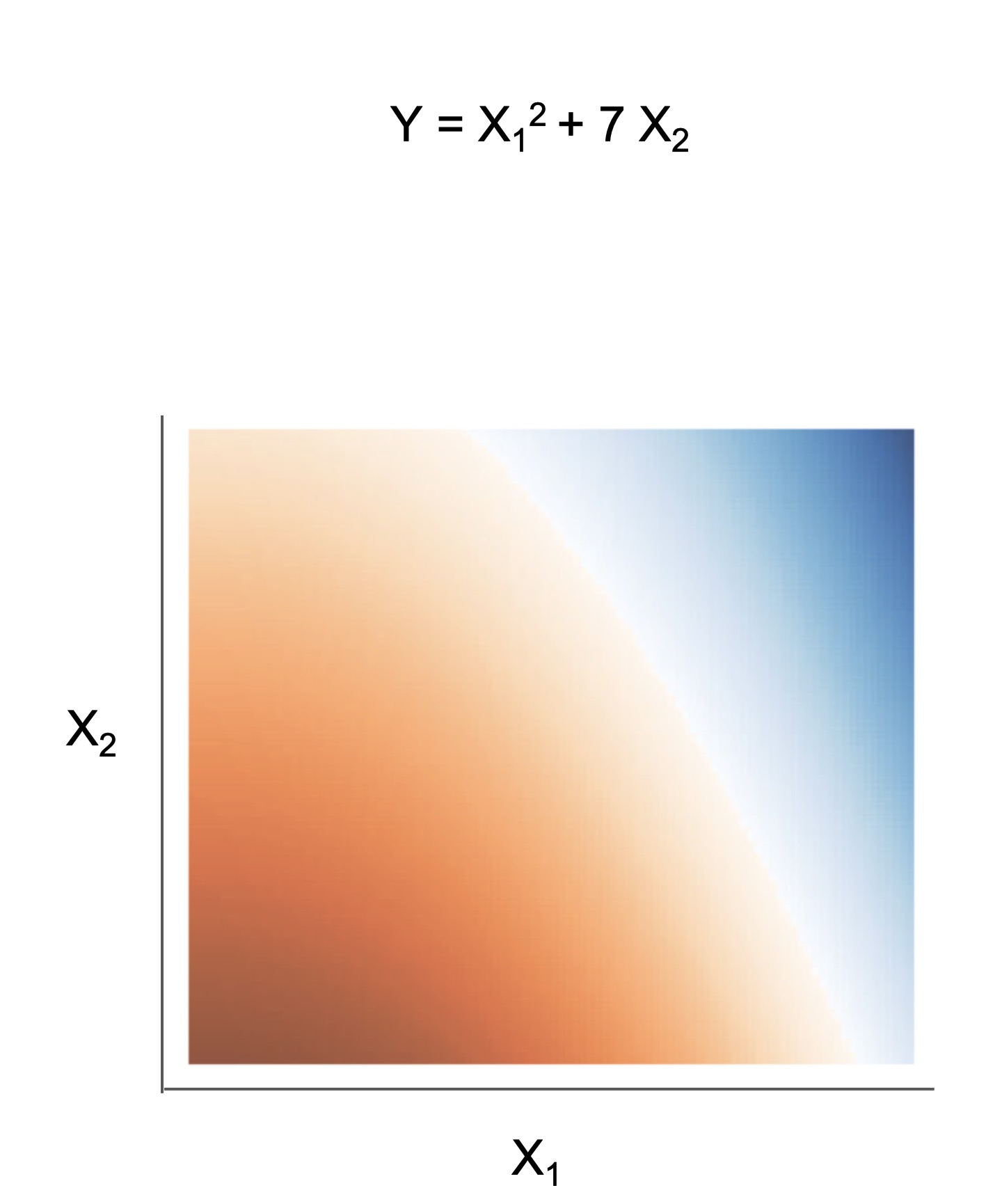

Each of the above models ends up in one of the following forms, which aim to be simultaneously simple to understand and highly predictive:

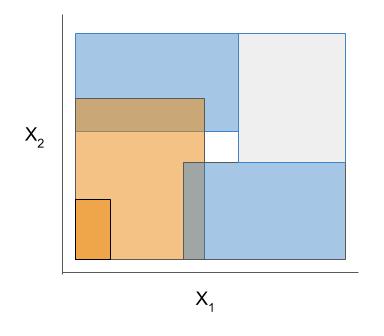

| Rule set | Rule list | Rule tree | Algebraic models |
|---|---|---|---|

Different models and algorithms vary not only in their final form but also in the choices made during modeling. In particular, many models differ in the 3 steps of the table below. For example, RuleFit and SkopeRules differ only in the way they prune rules: RuleFit uses a linear model whereas SkopeRules heuristically deduplicates rules sharing overlap. As another example, Bayesian rule lists and greedy rule lists differ in how they select rules: Bayesian rule lists perform a global optimization over possible rule lists, while greedy rule lists pick splits sequentially to maximize a given criterion. See the docs for individual models for further descriptions.
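
The greedy strategy can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the code behind `GreedyRuleListClassifier`: it assumes binary 0/1 labels, uses Gini impurity reduction as the criterion, always turns the `feature >= threshold` side of a split into a rule, and the names `best_split`, `fit_greedy_rule_list`, and `predict_proba_rule_list` are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(rows, labels):
    """Return (gain, feature, threshold) for the split with the largest impurity reduction."""
    n = len(labels)
    parent = gini(labels)
    best = None
    for j in range(len(rows[0])):
        for t in sorted({row[j] for row in rows}):
            inside = [y for row, y in zip(rows, labels) if row[j] >= t]
            outside = [y for row, y in zip(rows, labels) if row[j] < t]
            if not inside or not outside:
                continue  # not a real split
            gain = parent - (len(inside) * gini(inside) + len(outside) * gini(outside)) / n
            if best is None or gain > best[0]:
                best = (gain, j, t)
    return best


def fit_greedy_rule_list(rows, labels, max_rules=3):
    """Greedily build [(feature, threshold, probability), ...] plus a default probability."""
    rules = []
    while len(rules) < max_rules and len(set(labels)) > 1:
        split = best_split(rows, labels)
        if split is None:
            break
        _, j, t = split
        covered = [y for row, y in zip(rows, labels) if row[j] >= t]
        rules.append((j, t, sum(covered) / len(covered)))
        # Only the rows NOT covered by the new rule are passed on: a single path, not a tree.
        remaining = [(row, y) for row, y in zip(rows, labels) if row[j] < t]
        rows = [row for row, _ in remaining]
        labels = [y for _, y in remaining]
    return rules, sum(labels) / len(labels)


def predict_proba_rule_list(model, row):
    """Return the probability attached to the first rule that fires, else the default."""
    rules, default = model
    for j, t, prob in rules:
        if row[j] >= t:
            return prob
    return default


# Toy data (hypothetical): columns are (age, blood_pressure).
X = [(25, 120), (30, 150), (45, 130), (50, 160), (65, 125), (70, 155), (35, 158)]
y = [0, 1, 0, 1, 1, 1, 1]

rule_list_model = fit_greedy_rule_list(X, y)
```

Each step commits to the locally best split and never revisits it, which is the contrast with searching over whole rule lists at once.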

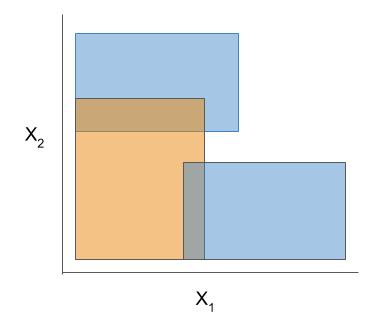

| Rule candidate generation | Rule selection | Rule pruning / combination |
|---|---|---|

The code here contains many useful and readable functions for rule-based learning in the util folder. This includes functions / classes for rule deduplication, rule screening, and converting between trees, rulesets, and neural networks.
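
As a rough idea of what rule deduplication can look like, the sketch below drops rules whose coverage overlaps too much with a rule already kept. It does not reproduce the functions in the util folder: the rule representation (a list of `(feature, op, threshold)` conditions), the Jaccard overlap criterion, the `max_overlap` parameter, and the names `coverage` and `deduplicate` are all assumptions made for this example.

```python
def coverage(rule, rows):
    """Indices of the rows that satisfy every (feature, op, threshold) condition of a rule."""
    ops = {"<=": lambda a, b: a <= b, ">": lambda a, b: a > b}
    return {
        i for i, row in enumerate(rows)
        if all(ops[op](row[feature], threshold) for feature, op, threshold in rule)
    }


def deduplicate(rules, rows, max_overlap=0.8):
    """Keep a rule only if its Jaccard overlap with every kept rule is at most max_overlap."""
    kept, kept_cover = [], []
    for rule in rules:  # earlier rules take priority over later ones
        cover = coverage(rule, rows)
        duplicate = False
        for other in kept_cover:
            union = cover | other
            if union and len(cover & other) / len(union) > max_overlap:
                duplicate = True
                break
        if not duplicate:
            kept.append(rule)
            kept_cover.append(cover)
    return kept


# Toy data (hypothetical): two numeric features per row.
rows = [(1, 10), (2, 20), (3, 30), (4, 40), (5, 50)]
candidate_rules = [
    [(0, ">", 2)],                 # covers rows 2, 3, 4
    [(1, ">", 25)],                # covers rows 2, 3, 4 as well: dropped as a duplicate
    [(0, "<=", 2)],                # covers rows 0, 1
    [(0, ">", 2), (1, "<=", 40)],  # covers rows 2, 3: overlap 2/3 with the first rule, kept
]
unique_rules = deduplicate(candidate_rules, rows)
```

Comparing rules by the rows they cover, rather than by their text, catches duplicates that are written in terms of different but correlated features.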

Demos are contained in the notebooks folder.

imodels for deriving a clinical decision rule

For updates, star the repo, see this related repo, or follow @csinva_. Please make sure to give authors of original methods / base implementations appropriate credit!
